What will be covered in this post:

This week we will create a clothes classification service in the cloud that identifies the type of clothing in the images we send to it.

We will use AWS Lambda to serve our model. We send the URL of an image to Lambda, which returns the predicted class.

We will take our previous clothes classification model, convert it to TensorFlow Lite, package it with Docker, and then upload the Docker image to AWS to serve it in the cloud.

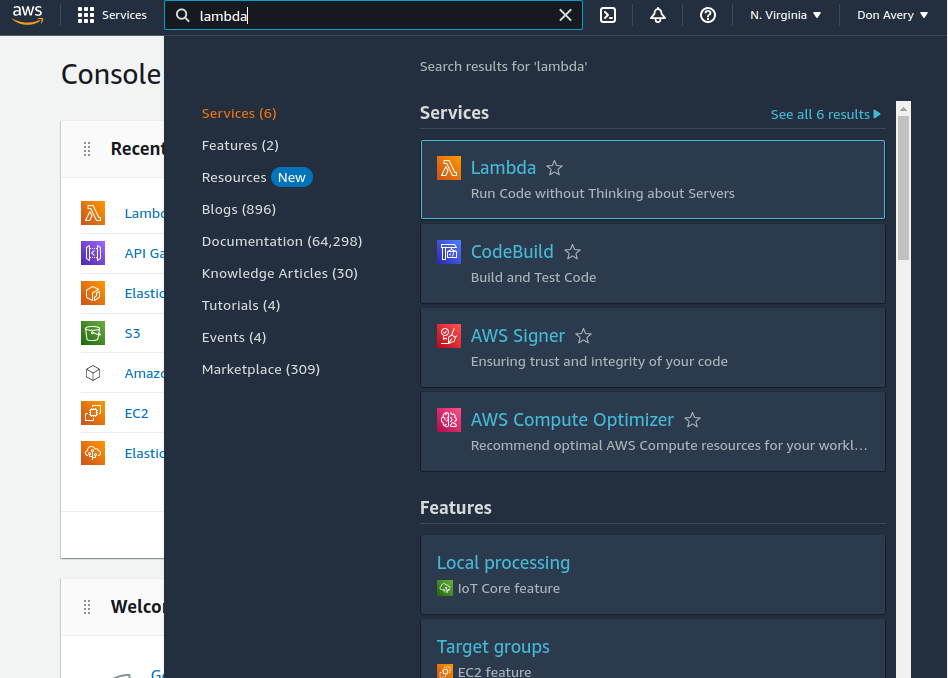

AWS Lambda

Lambda allows us to run code on AWS without worrying about creating servers or instances, hence the “serverless” name. First, we will create a new function in Lambda and choose the “Author from scratch” option. Next, we give our function a name, select the runtime language and the architecture we want to use, and then click “Create function.”

Once the function is created, we see a screen with information about our function. Since we chose Python as our runtime language, the function contains a file named “lambda_function.py.” We will modify this file for our demonstration so that the function prints the event and returns “PONG” when it is triggered.

Next, we click the “Test” button, name our test event “test”, and click “Save.” Then we have to click the “Deploy” button for our changes to take effect. When we click “Test” again, we see the response.
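For reference, a minimal version of the test function described above could look like the sketch below. The lambda_handler name and its (event, context) signature match the default handler that the Lambda Python runtime calls in lambda_function.py; the printed message and the sample event are only illustrative.

```python
def lambda_handler(event, context):
    # Lambda passes the test event's JSON payload in as a Python dict
    print("parameters:", event)
    # Whatever we return becomes the function's response
    return "PONG"


if __name__ == "__main__":
    # Local smoke test: call the handler the way Lambda would, without a context object
    print(lambda_handler({"key1": "value1"}, None))
```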

Using TensorFlow Lite instead of the full TensorFlow package reduces our storage costs and speeds up initialization.

TensorFlow Lite focuses only on inference, in other words, prediction. Therefore, TensorFlow Lite can’t be used to train a model.

The first step for this process will be to load a previously trained model to deploy. This Jupyter notebook shows the steps to convert a Keras model to tflite.

First, we will import the required packages.

import numpy as np

import tensorflow as tf

from tensorflow import keras

We have a pre-trained model on GitHub that we will download using !wget.

!wget https://github.com/alexeygrigorev/mlbookcamp-code/releases/download/chapter7-model/xception_v4_large_08_0.894.h5 -O clothing-model.h5

--2022-11-26 22:30:59-- https://github.com/alexeygrigorev/mlbookcamp-code/releases/download/chapter7-model/xception_v4_large_08_0.894.h5

Resolving github.com (github.com)... 140.82.121.4

Connecting to github.com (github.com)|140.82.121.4|:443... connected.

Saving to: 'clothing-model.h5'

0K .......... .......... .......... .......... .......... 0% 265K 5m18s

84150K .......... ..... 100% 330K=6m52s

2022-11-26 22:37:53 (204 KB/s) - 'clothing-model.h5' saved [86185888/86185888]


Load the model and assign it to the variable model.

model = keras.models.load_model('clothing-model.h5')

Now we will download a test image from GitHub, again using !wget.

!wget http://bit.ly/mlbookcamp-pants -O pants.jpg

--2022-11-26 22:42:54-- http://bit.ly/mlbookcamp-pants

Resolving bit.ly (bit.ly)... 67.199.248.11, 67.199.248.10

Connecting to bit.ly (bit.ly)|67.199.248.11|:80... connected.

HTTP request sent, awaiting response... 301 Moved Permanently

Location: https://raw.githubusercontent.com/alexeygrigorev/clothing-dataset-small/master/test/pants/4aabd82c-82e1-4181-a84d-d0c6e550d26d.jpg [following]

--2022-11-26 22:42:54-- https://raw.githubusercontent.com/alexeygrigorev/clothing-dataset-small/master/test/pants/4aabd82c-82e1-4181-a84d-d0c6e550d26d.jpg

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 185.199.108.133, 185.199.109.133, 185.199.110.133, ...

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|185.199.108.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 23048 (23K) [image/jpeg]

Saving to: 'pants.jpg'

0K .......... .......... .. 100% 88.6K=0.3s

2022-11-26 22:42:56 (88.6 KB/s) - 'pants.jpg' saved [23048/23048]

We will use load_img to load the image and preprocess_input to process the image.

from tensorflow.keras.preprocessing.image import load_img

from tensorflow.keras.applications.xception import preprocess_input

We turn the image into a NumPy array, wrap that array into a batch of one image, and then preprocess the batch.

img = load_img('pants.jpg', target_size=(299, 299))

x = np.array(img)

X = np.array([x])

X = preprocess_input(X)

img

Let's check the shape of the batch.

X.shape

(1, 299, 299, 3)

Next, we can perform a prediction on the image.

preds = model.predict(X)

Now we can see the results of the prediction.

preds

array([[-1.8681757, -4.7601914, -2.317127 , -1.0633811, 9.885927 ,

-2.8119776, -3.6656034, 3.1996472, -2.6022573, -4.8351283]],

dtype=float32)

Below is a list of the classes within our model.

classes = [

'dress',

'hat',

'longsleeve',

'outwear',

'pants',

'shirt',

'shoes',

'shorts',

'skirt',

't-shirt'

]

Next, we zip classes and preds[0] together and turn them into a dictionary to see each prediction lined up with its class.

dict(zip(classes, preds[0]))

{'dress': -1.8681757,

'hat': -4.7601914,

'longsleeve': -2.317127,

'outwear': -1.0633811,

'pants': 9.885927,

'shirt': -2.8119776,

'shoes': -3.6656034,

'shorts': 3.1996472,

'skirt': -2.6022573,

't-shirt': -4.8351283}
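The scores above are raw outputs rather than probabilities (some are negative and they don't sum to 1), so the predicted class is simply the one with the highest score. Assuming these are logits from a final layer without a softmax activation, which the values suggest but the post doesn't state, a short sketch can pick the top class and also turn the scores into probabilities. The helper names softmax and top_prediction are hypothetical.

```python
import math

classes = ['dress', 'hat', 'longsleeve', 'outwear', 'pants',
           'shirt', 'shoes', 'shorts', 'skirt', 't-shirt']

# Raw model outputs for pants.jpg, copied from the prediction above
logits = [-1.8681757, -4.7601914, -2.317127, -1.0633811, 9.885927,
          -2.8119776, -3.6656034, 3.1996472, -2.6022573, -4.8351283]


def softmax(values):
    # Subtract the max before exponentiating for numerical stability
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def top_prediction(classes, logits):
    # The predicted class is the index with the largest score
    best = max(range(len(logits)), key=lambda i: logits[i])
    probs = softmax(logits)
    return classes[best], probs[best]


label, prob = top_prediction(classes, logits)
print(label, round(prob, 4))
```

Softmax doesn't change which class scores highest. It only rescales the scores so they are easier to read.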

Convert Keras to TF-Lite

tf.lite is included in TensorFlow. We will first create a converter with tf.lite.TFLiteConverter.from_keras_model, run it on our model, and save the converted model to a file (see the TensorFlow Lite conversion documentation).

converter = tf.lite.TFLiteConverter.from_keras_model(model)

tflite_model = converter.convert()

# write the converted model to disk as raw bytes
with open('clothing-model.tflite', 'wb') as f_out:
    f_out.write(tflite_model)

INFO:tensorflow:Assets written to: C:\Users\daver\AppData\Local\Temp\tmp61gc3jpk\assets

C:\Users\daver\miniconda3\envs\tf_gpu\lib\site-packages\keras\utils\generic_utils.py:494: CustomMaskWarning: Custom mask layers require a config and must override get_config. When loading, the custom mask layer must be passed to the custom_objects argument.

warnings.warn('Custom mask layers require a config and must override '

!dir

Volume in drive C is Windows

Volume Serial Number is 061A-7F5F

Directory of C:\Users\daver\Desktop\DataScience\zoomcamp\Week_9

11/26/2022 11:00 PM <DIR> .

11/22/2022 07:10 PM <DIR> ..

11/21/2022 10:28 PM <DIR> .ipynb_checkpoints

11/21/2022 08:55 PM 0 AWS Lambda.txt

12/07/2021 03:41 PM 86,185,888 clothing-model.h5

11/26/2022 11:00 PM 83,999,908 clothing-model.tflite

11/21/2022 11:04 PM 271 Dockerfile

11/23/2022 06:42 AM <DIR> homework

11/21/2022 10:52 PM 936 lambda_function.py

11/26/2022 10:42 PM 23,048 pants.jpg

11/26/2022 11:00 PM 445,091 tensorflow-model.ipynb

11/21/2022 10:42 PM 825 tensorflow-model.py

11/21/2022 10:52 PM <DIR> __pycache__

8 File(s) 170,655,967 bytes

5 Dir(s) 149,050,187,776 bytes free

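We will now import tensorflow.lite and run the model through the TF Lite interpreter. This still uses TensorFlow, but it is the first step toward removing that dependency, because the same interpreter API is available in the standalone tflite_runtime package.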

import tensorflow.lite as tflite

Load the model, allocate its tensors, and look up the indices of the input and output tensors.

interpreter = tflite.Interpreter(model_path='clothing-model.tflite')

interpreter.allocate_tensors()

input_index = interpreter.get_input_details()[0]['index']

output_index = interpreter.get_output_details()[0]['index']

interpreter.get_input_details()

[{'name': 'input_8',

'index': 0,

'shape': array([ 1, 299, 299, 3]),

'shape_signature': array([ -1, 299, 299, 3]),

'dtype': numpy.float32,

'quantization': (0.0, 0),

'quantization_parameters': {'scales': array([], dtype=float32),

'zero_points': array([], dtype=int32),

'quantized_dimension': 0},

'sparsity_parameters': {}}]

interpreter.get_output_details()

[{'name': 'Identity',

'index': 229,

'shape': array([ 1, 10]),

'shape_signature': array([-1, 10]),

'dtype': numpy.float32,

'quantization': (0.0, 0),

'quantization_parameters': {'scales': array([], dtype=float32),

'zero_points': array([], dtype=int32),

'quantized_dimension': 0},

'sparsity_parameters': {}}]

We set the input, invoke the model and collect the output.

interpreter.set_tensor(input_index, X)

interpreter.invoke()

preds = interpreter.get_tensor(output_index)

classes = [

'dress',

'hat',

'longsleeve',

'outwear',

'pants',

'shirt',

'shoes',

'shorts',

'skirt',

't-shirt'

]

dict(zip(classes, preds[0]))

{'dress': -1.8681757,

'hat': -4.7601914,

'longsleeve': -2.317127,

'outwear': -1.0633811,

'pants': 9.885927,

'shirt': -2.8119776,

'shoes': -3.6656034,

'shorts': 3.1996472,

'skirt': -2.6022573,

't-shirt': -4.8351283}
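The same three steps (set the input, invoke the model, read the output) are repeated in each of the following sections, so they could be wrapped in a helper. The sketch below is a hypothetical run_tflite function that uses only the interpreter methods shown above. FakeInterpreter is an invented stand-in so the logic can be exercised without a model file. With the real interpreter, after calling allocate_tensors(), you would write preds = run_tflite(interpreter, X).

```python
def run_tflite(interpreter, X):
    """Run one batch through a TF Lite interpreter whose tensors are already allocated."""
    # Look up where the model expects its input and where it writes its output
    input_index = interpreter.get_input_details()[0]['index']
    output_index = interpreter.get_output_details()[0]['index']
    # Copy the batch into the input tensor, run inference, and read the result
    interpreter.set_tensor(input_index, X)
    interpreter.invoke()
    return interpreter.get_tensor(output_index)


class FakeInterpreter:
    """Hypothetical stand-in that mimics the interpreter calls used by run_tflite."""

    def __init__(self):
        self.tensors = {}

    def get_input_details(self):
        return [{'index': 0}]

    def get_output_details(self):
        # 229 mirrors the output index printed by get_output_details() above
        return [{'index': 229}]

    def set_tensor(self, index, value):
        self.tensors[index] = value

    def invoke(self):
        # Pretend "model": output the batch size of the input as a single score
        self.tensors[229] = [[float(len(self.tensors[0]))]]

    def get_tensor(self, index):
        return self.tensors[index]
```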

Removing TF dependency

Looking at the keras-preprocessing source code on GitHub, we find that Keras uses PIL (Pillow) to open and resize images. We will use PIL directly here instead of depending on Keras.

from PIL import Image

# open the image and resize it to the 299x299 input size Xception expects
with Image.open('pants.jpg') as img:
    img = img.resize((299, 299), Image.NEAREST)

C:\Users\daver\AppData\Local\Temp\ipykernel_29580\2391656872.py:2: DeprecationWarning: NEAREST is deprecated and will be removed in Pillow 10 (2023-07-01). Use Resampling.NEAREST or Dither.NONE instead. img = img.resize((299, 299), Image.NEAREST)

Next, we need to find out how Keras performs the Xception preprocessing. Its source on GitHub shows that it scales pixel values from the range [0, 255] to [-1, 1], which we can reproduce with a short function.

def preprocess_input(x):
    # scale pixel values from [0, 255] to [-1, 1], as Xception expects
    x /= 127.5
    x -= 1.
    return x
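To see what this preprocessing does to individual pixel values, the same arithmetic can be applied to single numbers. This is a minimal sketch, and xception_scale is a hypothetical name used only for illustration.

```python
def xception_scale(pixel):
    # Same arithmetic as preprocess_input: divide by 127.5, then subtract 1
    return pixel / 127.5 - 1.0


# The three reference points of the [0, 255] -> [-1, 1] mapping
for value in (0, 127.5, 255):
    print(value, '->', xception_scale(value))
# prints:
# 0 -> -1.0
# 127.5 -> 0.0
# 255 -> 1.0
```

The in-place operations in preprocess_input (x /= 127.5) only work on a floating-point array, which is why the image is converted with dtype='float32'. NumPy refuses to write the float result of the division back into an integer (uint8) array.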

#img = load_img('pants.jpg', target_size=(299, 299))

x = np.array(img, dtype='float32')

X = np.array([x])

X = preprocess_input(X)

interpreter.set_tensor(input_index, X)

interpreter.invoke()

preds = interpreter.get_tensor(output_index)

classes = [

'dress',

'hat',

'longsleeve',

'outwear',

'pants',

'shirt',

'shoes',

'shorts',

'skirt',

't-shirt'

]

dict(zip(classes, preds[0]))

{'dress': -1.8682916,

'hat': -4.7612457,

'longsleeve': -2.316979,

'outwear': -1.0625672,

'pants': 9.8871565,

'shirt': -2.8124275,

'shoes': -3.666287,

'shorts': 3.2003636,

'skirt': -2.6023414,

't-shirt': -4.8350444}

The simple way of doing this

We can use the keras-image-helper package, described as "A lightweight library for pre-processing images for pre-trained keras models".

We will first install and then load the library.

!pip install keras-image-helper

Collecting keras-image-helper Downloading keras_image_helper-0.0.1-py3-none-any.whl (4.6 kB) Requirement already satisfied: numpy in c:\users\daver\miniconda3\envs\tf_gpu\lib\site-packages (from keras-image-helper) (1.23.4) Requirement already satisfied: pillow in c:\users\daver\miniconda3\envs\tf_gpu\lib\site-packages (from keras-image-helper) (9.2.0) Installing collected packages: keras-image-helper Successfully installed keras-image-helper-0.0.1

The tflite-runtime package can be installed on its own by following its installation instructions on GitHub.

This allows us to install tflite-runtime without installing TensorFlow.

!pip install --extra-index-url https://google-coral.github.io/py-repo/ tflite-runtime

Looking in indexes: https://pypi.org/simple, https://google-coral.github.io/py-repo/

Collecting tflite-runtime

Downloading https://github.com/google-coral/pycoral/releases/download/v2.0.0/tflite_runtime-2.5.0.post1-cp39-cp39-win_amd64.whl (867 kB)

------------------------------------ 867.1/867.1 kB 332.4 kB/s eta 0:00:00

Requirement already satisfied: numpy>=1.16.0 in c:\users\daver\miniconda3\envs\tf_gpu\lib\site-packages (from tflite-runtime) (1.23.4)

Installing collected packages: tflite-runtime

Successfully installed tflite-runtime-2.5.0.post1

Now we can comment out the line that imports tensorflow.lite and use tflite_runtime instead. This allows us to package the smallest possible container to use on AWS Lambda.

# import tensorflow.lite as tflite

import tflite_runtime.interpreter as tflite

from keras_image_helper import create_preprocessor

interpreter = tflite.Interpreter(model_path='clothing-model.tflite')

interpreter.allocate_tensors()

input_index = interpreter.get_input_details()[0]['index']

output_index = interpreter.get_output_details()[0]['index']

preprocessor = create_preprocessor('xception', target_size=(299, 299))

url = 'http://bit.ly/mlbookcamp-pants'

X = preprocessor.from_url(url)

interpreter.set_tensor(input_index, X)

interpreter.invoke()

preds = interpreter.get_tensor(output_index)

classes = [

'dress',

'hat',

'longsleeve',

'outwear',

'pants',

'shirt',

'shoes',

'shorts',

'skirt',

't-shirt'

]

dict(zip(classes, preds[0]))

{'dress': -1.8682901,

'hat': -4.7612457,

'longsleeve': -2.3169823,

'outwear': -1.0625706,

'pants': 9.8871565,

'shirt': -2.8124304,

'shoes': -3.6662836,

'shorts': 3.200361,

'skirt': -2.6023388,

't-shirt': -4.835045}

Preparing the Lambda code

First, we will turn the code from the Jupyter notebook in which we converted the model from Keras to TF Lite into a script.

We will use nbconvert for this extraction.

jupyter nbconvert --to script 'tensorflow-model.ipynb'

Now we have a file named ‘tensorflow-model.py.’ First, we clean it up by removing everything except the last section of the notebook. Next, we create a function that predicts on a URL and returns the zipped dictionary. Finally, we rename the file to ‘lambda_function.py’, since this is the function we will run on AWS Lambda.

import tflite_runtime.interpreter as tflite

from keras_image_helper import create_preprocessor

interpreter = tflite.Interpreter(model_path='clothing-model.tflite')

interpreter.allocate_tensors()

input_index = interpreter.get_input_details()[0]['index']

output_index = interpreter.get_output_details()[0]['index']

preprocessor = create_preprocessor('xception', target_size=(299, 299))

classes = [

'dress',

'hat',

'longsleeve',

'outwear',

'pants',

'shirt',

'shoes',

'shorts',

'skirt',

't-shirt'

]

#url = 'http://bit.ly/mlbookcamp-pants'

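def predict(url):
    # download and preprocess the image, then run it through the model
    X = preprocessor.from_url(url)
    interpreter.set_tensor(input_index, X)
    interpreter.invoke()
    preds = interpreter.get_tensor(output_index)
    # convert numpy float32 values to plain Python floats so Lambda can serialize the result to JSON
    float_predictions = preds[0].tolist()
    return dict(zip(classes, float_predictions))

def lambda_handler(event, context):
    # the event is the JSON payload, e.g. {"url": "http://bit.ly/mlbookcamp-pants"}
    url = event['url']
    result = predict(url)
    return result

Preparing a Docker image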

First, we will create a file named Dockerfile. AWS provides prepackaged Lambda base images that we can build on.

We want to use the lambda/python base image, and under ‘Image tags,’ we can find the image we would like to use. We will use an image with Python 3.8.

In our Dockerfile, we start from the Python base image and install the dependencies we used in our Jupyter notebook: the keras-image-helper and tflite_runtime packages. After that, we copy our tflite model and the lambda_function.py file into the image's working directory. Finally, we set the command (CMD) to the lambda_handler function in our lambda_function script, which is the handler Lambda will invoke.

FROM public.ecr.aws/lambda/python:3.8
RUN pip install keras-image-helper
RUN pip install --extra-index-url \
    https://google-coral.github.io/py-repo/ tflite-runtime
COPY clothing-model.tflite .
COPY lambda_function.py .
CMD [ "lambda_function.lambda_handler" ]

Now that our Dockerfile is complete, we will build the Docker image using the following:

docker build -t clothing-model .

The ‘.’ tells Docker to use the current directory as the build context, which is where it finds our Dockerfile. The build pulls the base image and installs all the packages into the image.

We can now test our Docker container locally using the following:

docker run -it --rm -p 8080:8080 clothing-model:latest

This starts a container from our image and maps port 8080, where the Lambda base image listens for local invocations. Now we can create a test script for it.

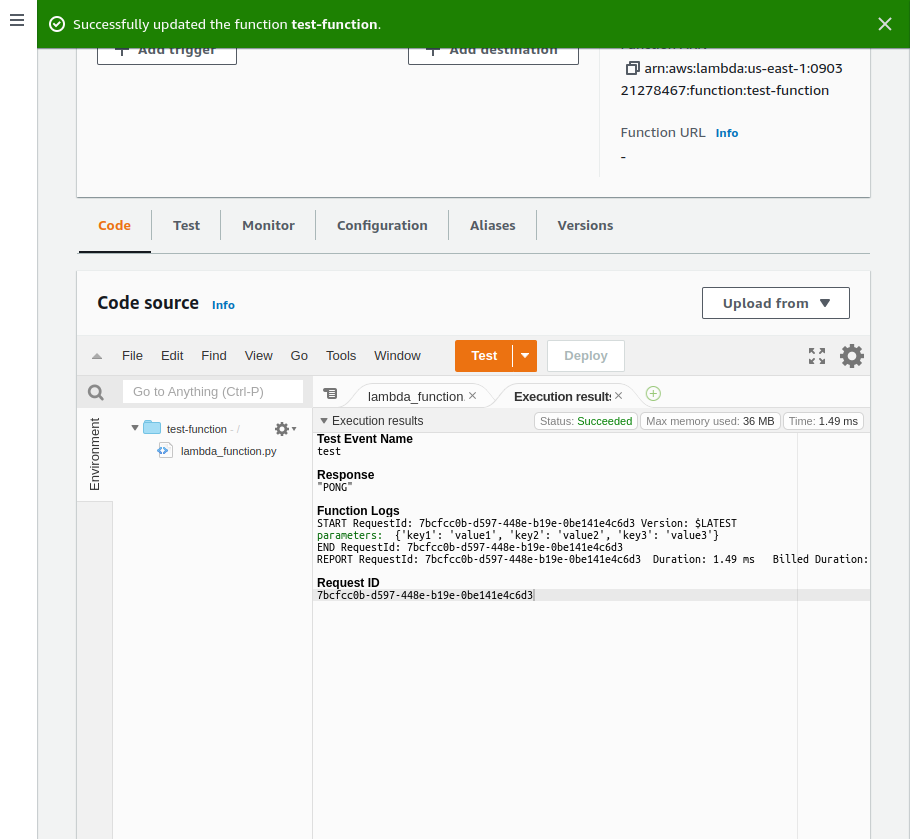

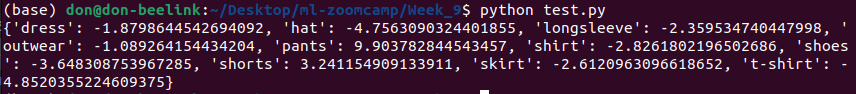

Inside the test.py file, we import requests and assign the local invocation URL of our Docker container to the ‘url’ variable. Next, we put the URL of the pants image into the ‘data’ dictionary. Finally, we POST ‘data’ as JSON and parse the JSON response into ‘result’.

import requests

url = 'http://localhost:8080/2015-03-31/functions/function/invocations'
#url = 'https://000000000.execute-api.us-east-1.amazonaws.com/test/predict'
data = {'url': 'http://bit.ly/mlbookcamp-pants'}
result = requests.post(url, json=data).json()
print(result)

Now we run the test.py file, and we see our results.

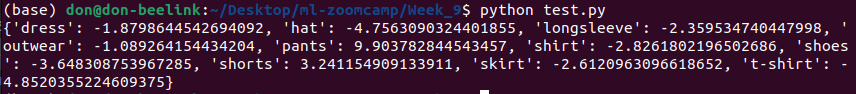

Now that we have built and tested our Docker image locally, we will deploy it to AWS. First, we push the image to AWS ECR (Elastic Container Registry), and then we create a Lambda function from it. We will create the ECR repository with the command line. If you haven’t done so already, you will have to run ‘pip install awscli’.

We can run the following:

aws ecr create-repository --repository-name clothing-tflite-images

This creates a repository on AWS. Now we will log in to that repository using the following:

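aws ecr get-login --no-include-email

This prints a docker login command that contains the password, which I will not expose here. To run that command immediately without an extra step, we can use the following:

$(aws ecr get-login --no-include-email)

This retrieves the password and logs you in with one step. Note that get-login is only available in version 1 of the AWS CLI. In version 2, the equivalent is aws ecr get-login-password --region us-east-1 | docker login --username AWS --password-stdin <account>.dkr.ecr.us-east-1.amazonaws.com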

When we created the repository, AWS returned its URI (the repositoryUri field in the output). We build the full address of our Docker image from the same parts using a few shell variables, and then push the image to the repository.

ACCOUNT=090321278467

REGION=us-east-1

REGISTRY=clothing-tflite-images

PREFIX=${ACCOUNT}.dkr.ecr.${REGION}.amazonaws.com/${REGISTRY}

TAG=clothing-model-exception-v4-001

REMOTE_URI=${PREFIX}:${TAG}

To view the address:

echo ${REMOTE_URI}

Now we can tag and push our Docker image to the repository using the following:

docker tag clothing-model:latest ${REMOTE_URI}

docker push ${REMOTE_URI}

Now when we look at our AWS ECR repository page, we will see the “clothing-tflite-images” repository.
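The shell variables above concatenate the parts of an ECR image address. The same composition, written here as a hypothetical Python helper named ecr_image_uri purely for illustration, makes the structure explicit: the registry host comes from the account ID and region, and the repository name and tag are appended to it.

```python
def ecr_image_uri(account, region, repository, tag):
    # <account>.dkr.ecr.<region>.amazonaws.com is the registry host
    prefix = f"{account}.dkr.ecr.{region}.amazonaws.com/{repository}"
    # the image tag is appended after a colon, as in REMOTE_URI=${PREFIX}:${TAG}
    return f"{prefix}:{tag}"


print(ecr_image_uri("090321278467", "us-east-1",
                    "clothing-tflite-images", "clothing-model-exception-v4-001"))
```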

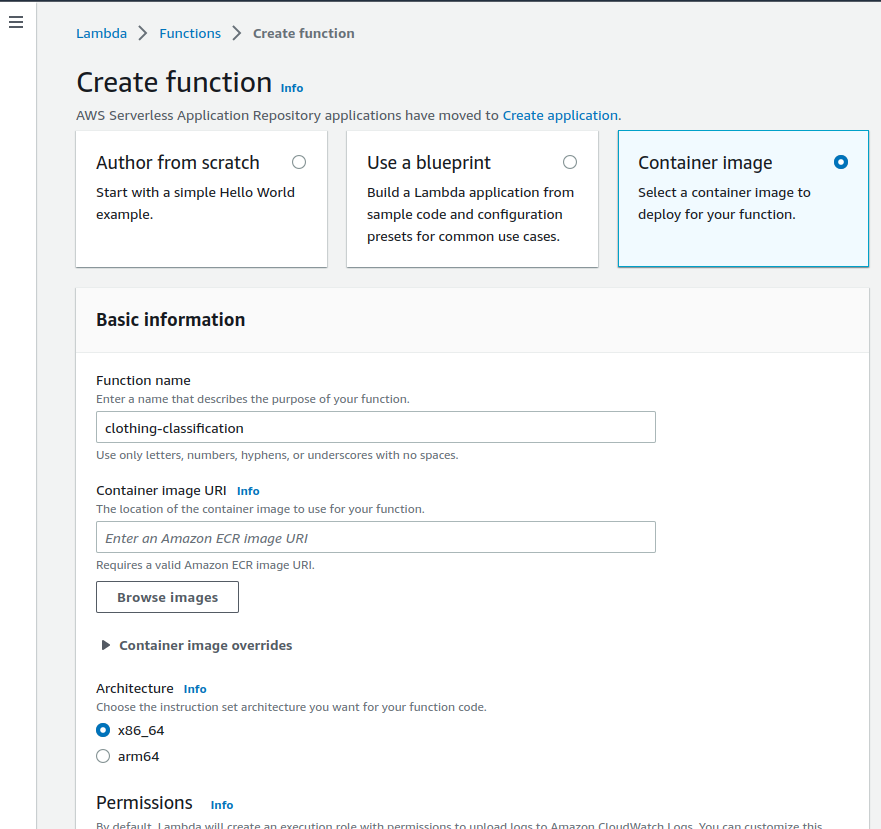

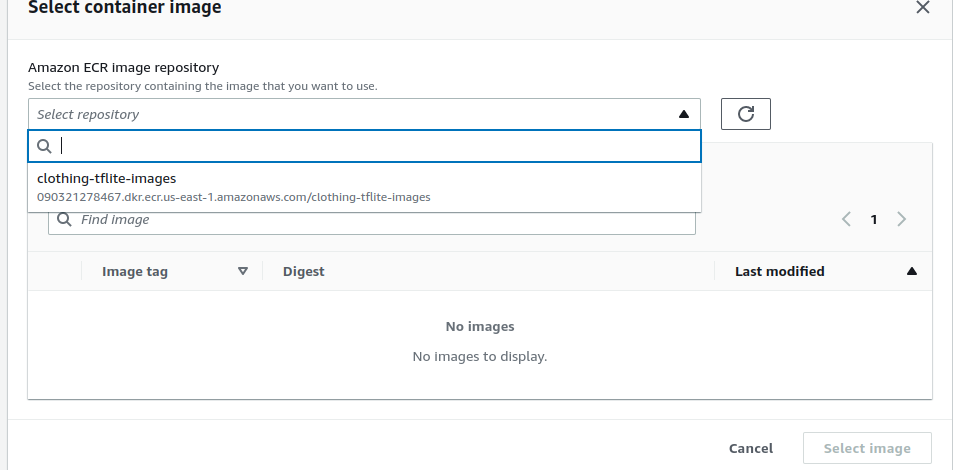

We now have our Docker image stored on the AWS platform. It is time to create a Lambda function that uses it. After clicking “Create function” in Lambda, we choose “Container image,” give the function a name (in this case, “clothing-classification”), and then select our Docker image for the “Container image URI” field using the “Browse images” button. Finally, we create the function with the “Create function” button.

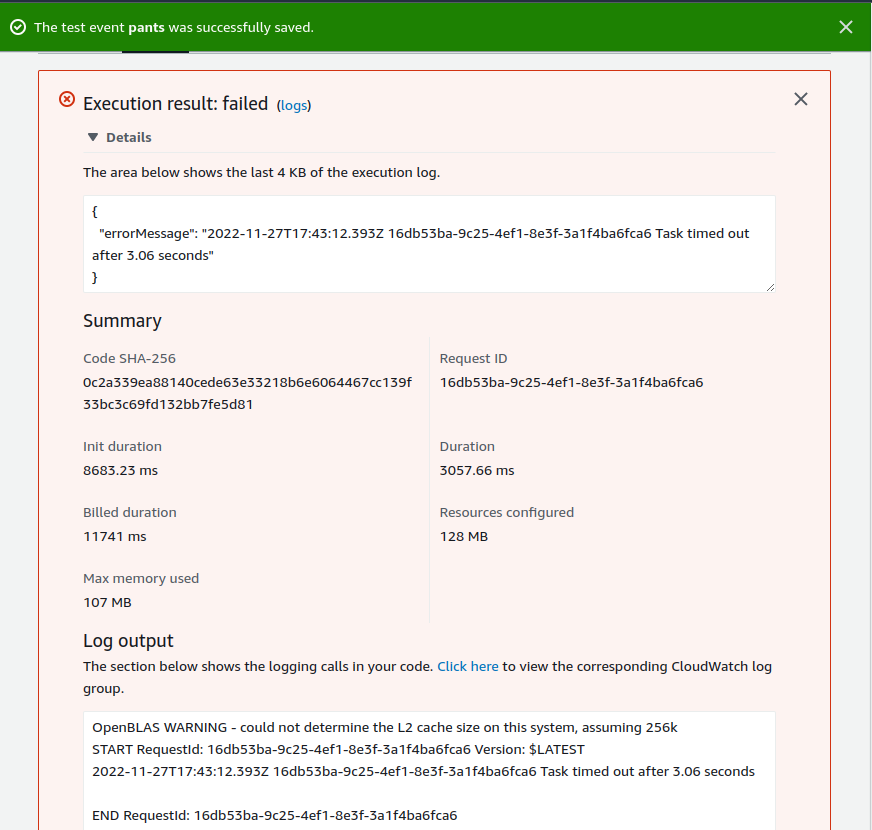

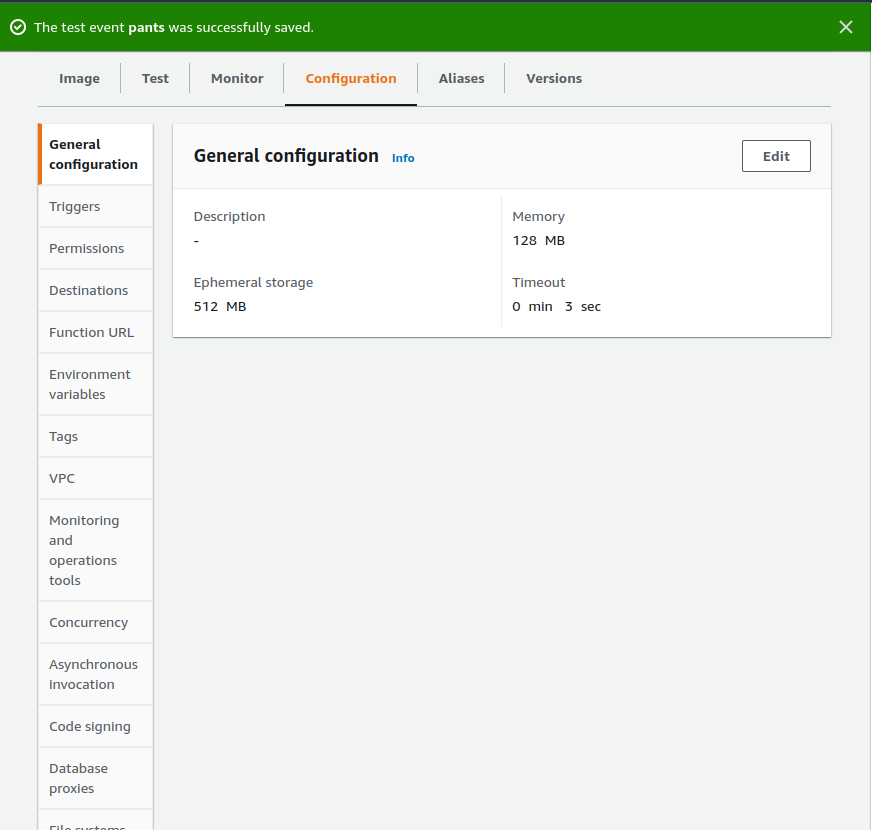

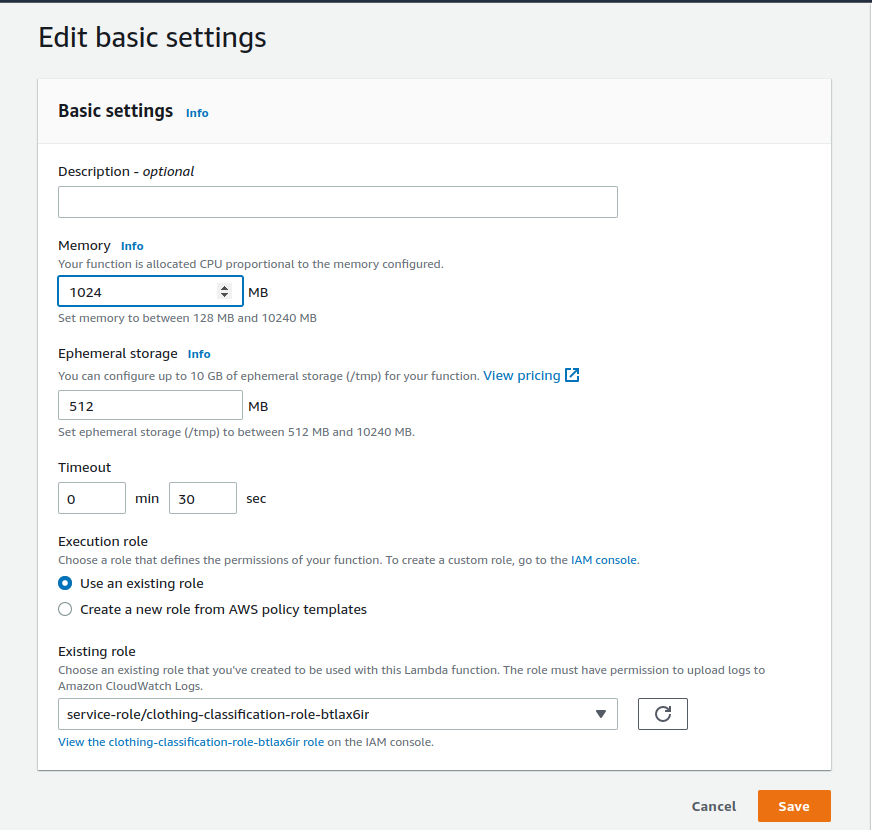

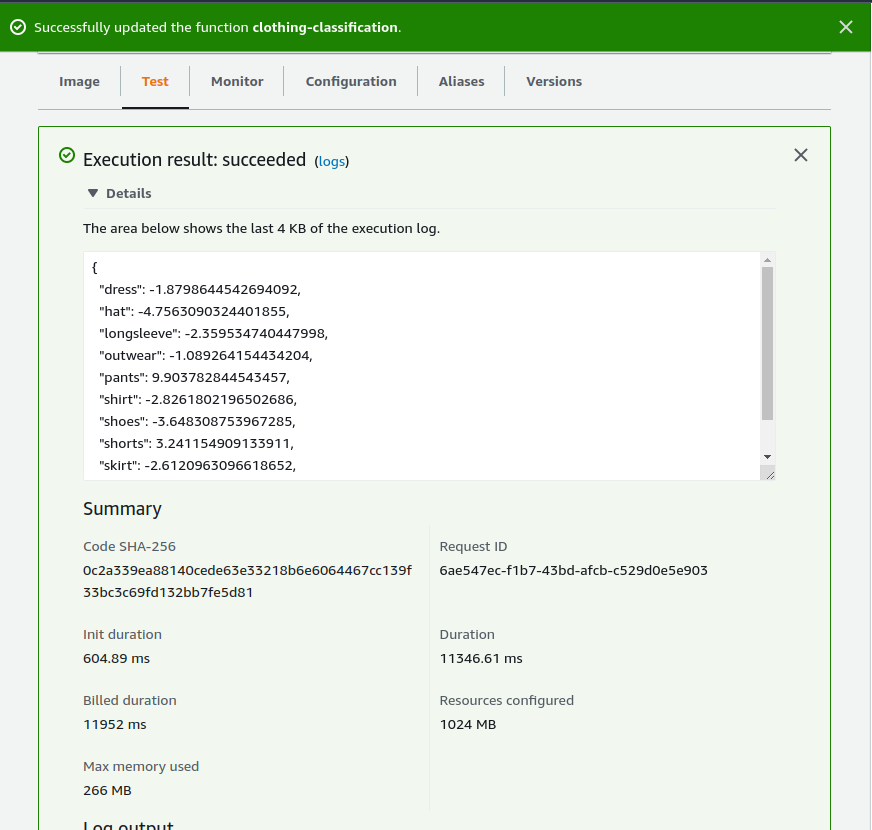

Our “clothing-classification” function has been created, and now we can test it on the “Test” tab. We again use the URL of the pants image as the test event and click the “Test” button. We get an error stating that the task timed out after roughly 3 seconds. This is Lambda's default timeout, which isn't enough for our model, so we change it on the “Configuration” tab: we click the “Edit” button, increase the timeout to 30 seconds, add a little more memory, and click “Save.” Finally, we return to the “Test” tab and rerun our test.

This time the test succeeds and shows our predictions. The first run took roughly 11 seconds to initialize, load the model, and make the prediction. The second run took only about 2 seconds, because the already-initialized function is reused.

API Gateway: exposing the lambda function

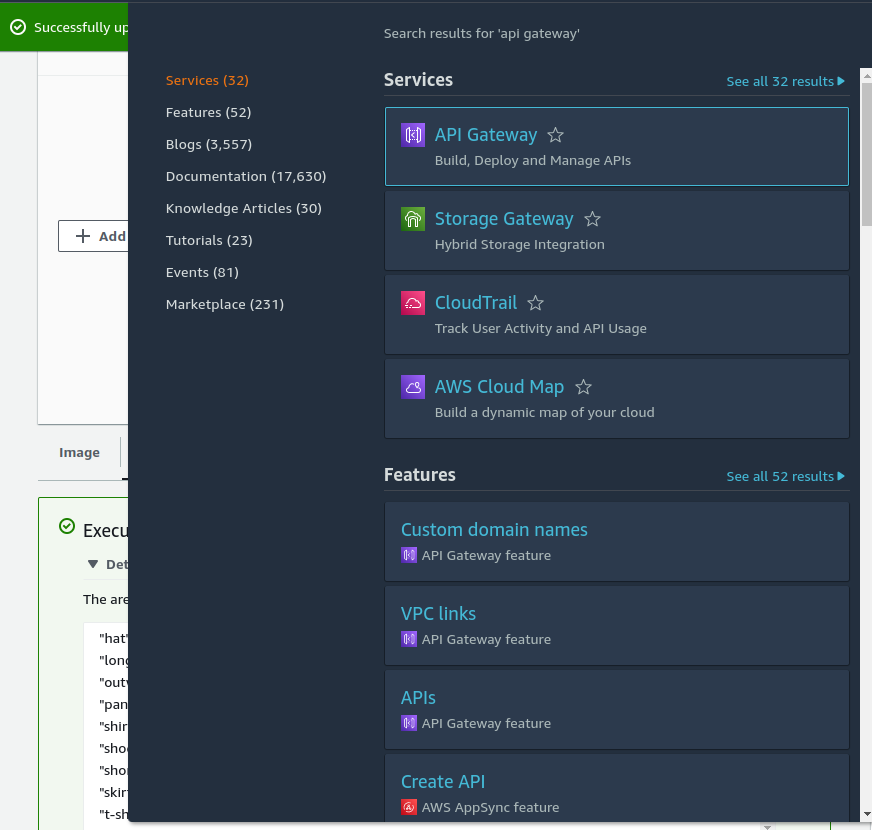

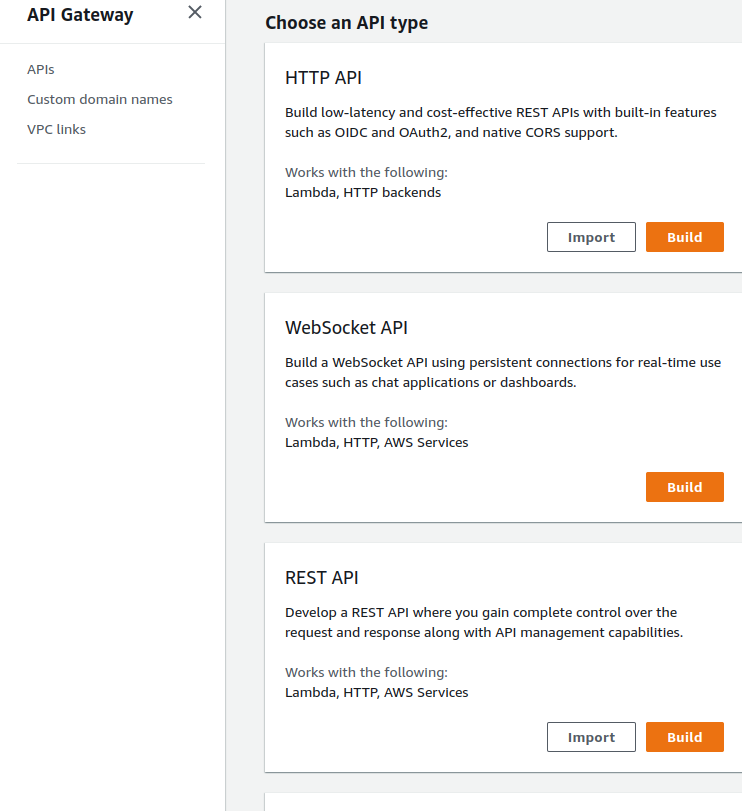

Our next step is to expose our Lambda function as a web service using API Gateway. We can search for “API Gateway” to find this service, and we will choose “REST API.”

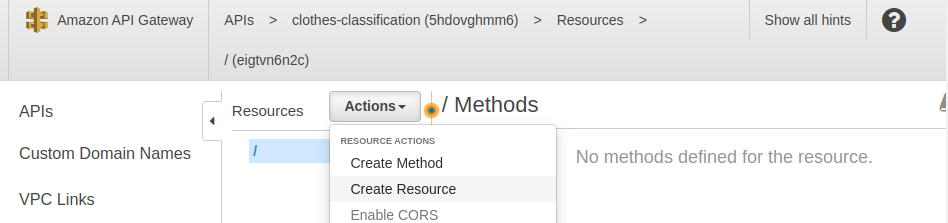

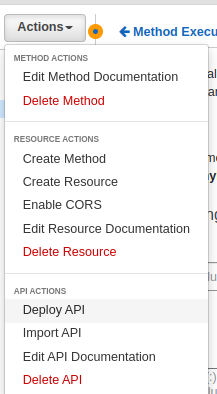

If this is your first time, an example API will be shown. We will select “New API,” name our API and then click “Create API.” Clicking the “Actions” drop-down button will allow us to choose “Create Resource.”

In the “Resource Name” field, enter a name. I will use “predict” here to use the same convention we have been using in the zoomcamp course. Then click the “Create Resource” button.

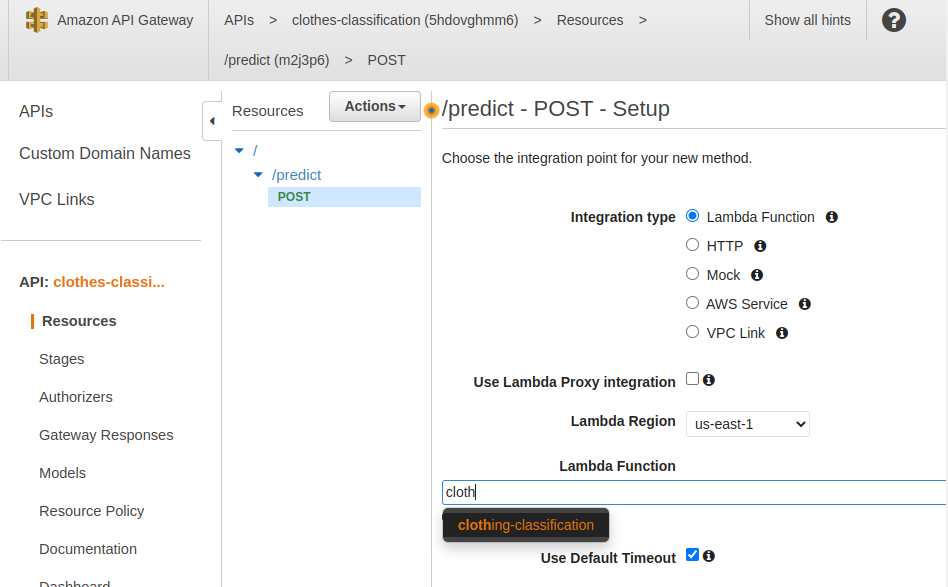

We will have to add a method to our resource, and we can do that by clicking on the “Actions” button and selecting “Create Method,” and then choosing “POST” as our method type. Now we will select “Lambda Function” and enter “clothing-classification” as the Lambda Function we will use.

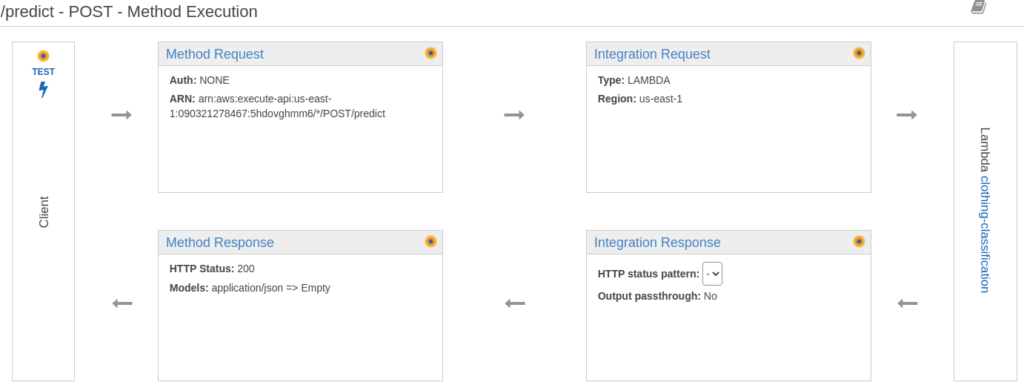

We will see a Permission window, and we can select “OK.” Then we will see a diagram of the Method-Request-Response process.

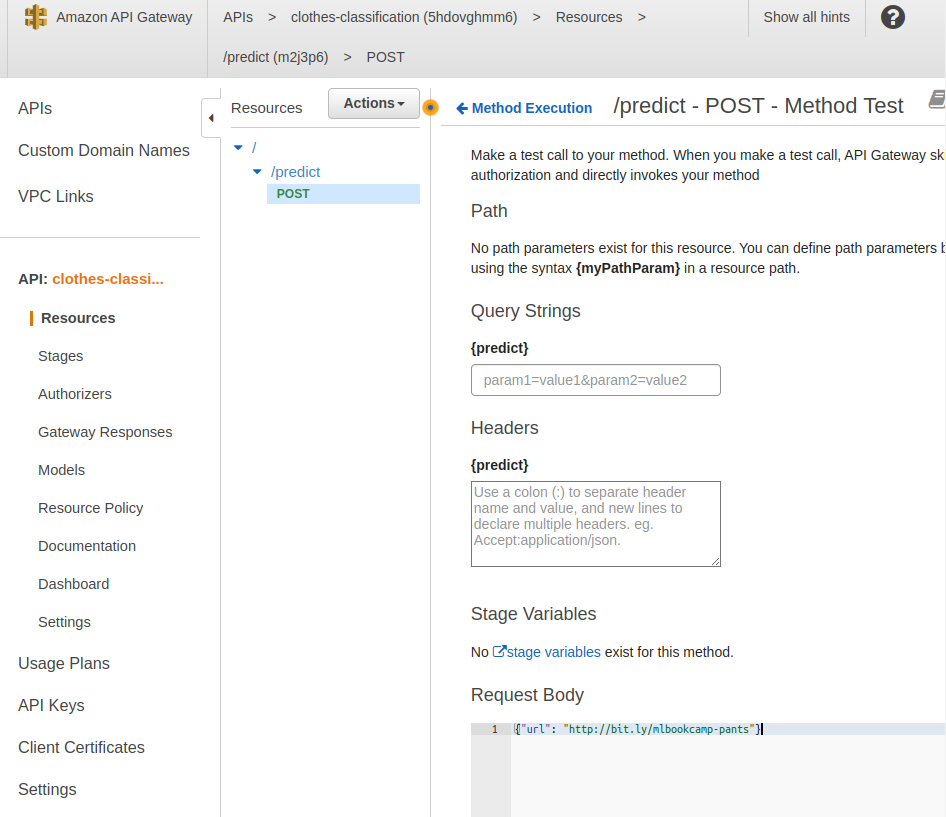

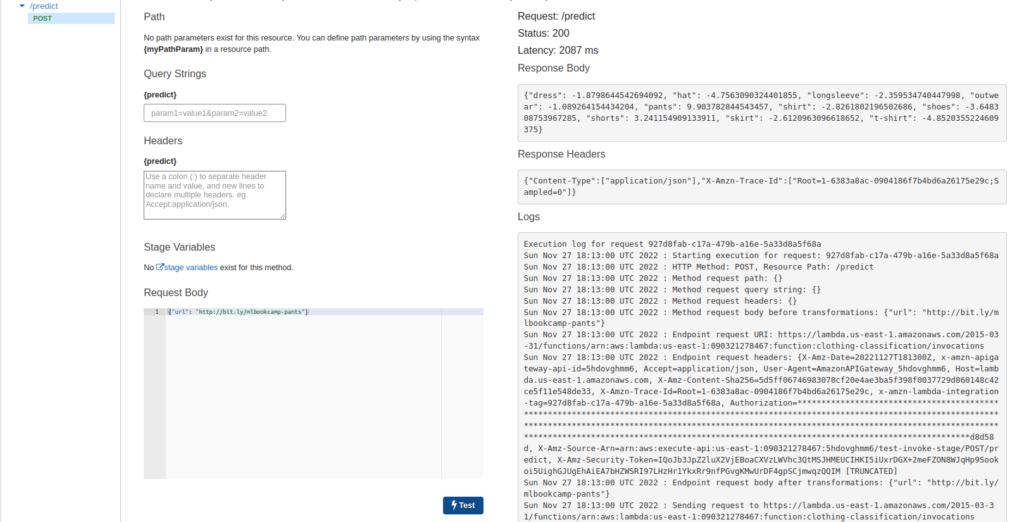

We have to set up the test using our diagram’s “Test” link. Then, in the request body, we will again enter our JSON URL information for the image of the pants. Then we can click the “Test” Lightning bolt button.

The results are the body of the response showing our predictions.

It is time to deploy this API. Using the “Actions” button again, we select “Deploy API.” Under “New Stage,” we enter “test” as the “Stage name” and then click “Deploy.”

We are now presented with the URL where our API resides. The URL doesn’t contain our endpoint, “/predict”, so we add it to the end of the URL. Then we copy that URL into our test.py file and comment out the local URL. When we run the test.py file, we see our predictions.

import requests

#url = 'http://localhost:8080/2015-03-31/functions/function/invocations'

url = 'https://0000000000.execute-api.us-east-1.amazonaws.com/test/predict'

data = {'url': 'http://bit.ly/mlbookcamp-pants'}

result = requests.post(url, json=data).json()

print(result)

Now our Lambda function is exposed as a web service.
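The first call to the deployed function took about 11 seconds, so it can help to give the client an explicit timeout. As an alternative to requests, here is a sketch of the same client using only Python's standard library. The function names build_request and classify and the 30-second timeout are assumptions for illustration, and ENDPOINT is the placeholder URL from the post, which you would replace with your own stage URL. Calling classify(ENDPOINT, 'http://bit.ly/mlbookcamp-pants') sends the same request as test.py.

```python
import json
import urllib.request

# Placeholder API Gateway URL from the post; replace it with your own stage URL
ENDPOINT = 'https://0000000000.execute-api.us-east-1.amazonaws.com/test/predict'


def build_request(endpoint, image_url):
    # Same payload as test.py: {"url": <image URL>}, sent as a JSON POST body
    body = json.dumps({'url': image_url}).encode('utf-8')
    return urllib.request.Request(
        endpoint,
        data=body,
        headers={'Content-Type': 'application/json'},
        method='POST',
    )


def classify(endpoint, image_url, timeout=30):
    # Allow for cold starts; urlopen raises an exception if no response arrives in time
    request = build_request(endpoint, image_url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode('utf-8'))
```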
